Problem

One way to authenticate Databricks against the Azure platform is to use a service principal registered in Azure AD with the required permissions.

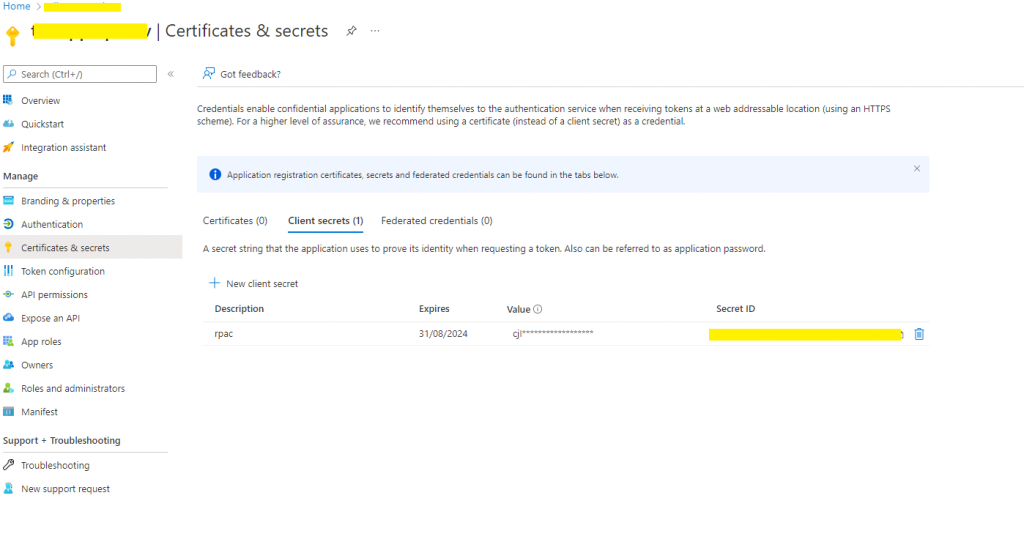

If a client secret is chosen for authentication, Azure enforces an expiry date on the secret. When the secret expires, Databricks loses access to Azure and fails with an error like the one below. The error can appear wherever dbutils.fs commands are run:

AADSTS7000215: Invalid client secret provided. Ensure the secret being sent in the request is the client secret value, not the client secret ID, for a secret added to app

In theory, creating a new secret and supplying it to the Databricks code should fix the issue. However, in my case I kept receiving the error even after creating a new secret.

Solution

In my case Databricks was caching the mount metadata, and the following steps were required to fix the issue:

- Run dbutils.fs.refreshMounts()

- Run dbutils.fs.unmount() for all mounted paths

- Run dbutils.fs.mount() for all mounted paths, passing the new secret.
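
The steps above can be sketched as a small Python helper for a notebook. This is only a sketch under some assumptions: the function name `remount_all` and the `/mnt/` prefix filter are my own (the filter is there to skip the built-in mounts that `dbutils.fs.mounts()` also returns), and `extra_configs` is assumed to be the same configuration dictionary used for the original mounts, now containing the new client secret.

```python
def remount_all(dbutils, extra_configs, prefix="/mnt/"):
    """Refresh, unmount and re-mount every mount point under `prefix`.

    `dbutils` is the object Databricks provides in a notebook.
    `extra_configs` is assumed to hold the auth settings with the NEW secret.
    `prefix` is an assumption used to leave built-in mounts untouched.
    """
    # Step 1: refresh the cached mount metadata on this cluster.
    dbutils.fs.refreshMounts()

    # Remember source and mount point before anything is unmounted.
    targets = [
        (m.source, m.mountPoint)
        for m in dbutils.fs.mounts()
        if m.mountPoint.startswith(prefix)
    ]

    # Step 2: unmount all selected paths.
    for _, mount_point in targets:
        dbutils.fs.unmount(mount_point)

    # Step 3: mount them again with the updated credentials.
    for source, mount_point in targets:
        dbutils.fs.mount(
            source=source,
            mount_point=mount_point,
            extra_configs=extra_configs,
        )

    return [mount_point for _, mount_point in targets]
```

In a notebook this would be called as `remount_all(dbutils, configs)`, where `configs` is whatever dictionary was passed to `dbutils.fs.mount` originally, with the client secret entry replaced by the new value. If the mounts live somewhere other than `/mnt/`, the `prefix` argument needs to be adjusted.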